Our current government has stated time and again that ‘the best way for poor communities to get out of poverty is through employment’. It is true that when poorer students are able to secure good jobs, they can lift not only themselves but also their communities out of poverty. This has the potential to invigorate local economies, which in turn drives up the standard of living. The issue is that the marking of essay-based exams is unreliable!

Although this does not apply universally, grades often determine which university courses students can apply for and thus which careers they end up in. Any child will have heard a teacher or relative recite the mantra that ‘good grades lead to good jobs’. Attainment correlates directly with future earning potential, which is why poorer families strive so hard to use academic attainment as a vehicle for social mobility. Tragically, the way we mark essays is in reality very unreliable. Ofqual has conceded that marking reliability is only 50% when it comes to high-stakes essays. This means that potentially 1 in 2 students has the wrong grade for an essay-based assessment!

Inaccurate marking is an invisible and seemingly impenetrable barrier to social mobility. Getting the wrong grade disproportionately affects BAME and working-class pupils, as evidenced by the Social Mobility Commission’s State of the Nation report:

“People from working class backgrounds are 80 per cent less likely to get into professional jobs. Even when they do, class plays an important role in pay; within professional occupations, those from working class backgrounds earn 17 per cent less than people from professional backgrounds. Ultimately, class plays an outsized role in a person’s ability to move up the income and jobs ladder, and there has been no measurable improvement in recent years.” Social Mobility Commission, State of the Nation 2018-19, p19

In their seminal research on marking reliability, Meadows and Billington (2005) “…made clear the inherent unreliability associated with assessment in general, and associated with marking in particular.” However, since the grades awarded to students can ultimately help families out of poverty, it is not good enough simply to accept that marking is unreliable because it is subjective. Meadows and Billington (2005) called for measures of ‘Marking Reliability’ for specific assessments to be published alongside the results, in order to contextualise the grades. They also examined alternatives such as ‘e-marking’ that “should produce an increase in marking reliability compared to traditional paper based approaches”.

Another reason why marking and feedback sometimes lack the quality that students need is that teachers are already overworked! The Department for Education has made teacher retention a focal point of recent policy; in particular, the Workload Challenge of 2014 called on the Ed-Tech industry to provide innovative solutions for reducing teacher workload. With laborious marking policies designed to ‘evidence learning’ for Ofsted inspections, such as ‘triple-impact marking’ or marking in several different coloured pens, it is no surprise that teachers currently work 60 hours a week, of which 11 hours are spent on marking alone!

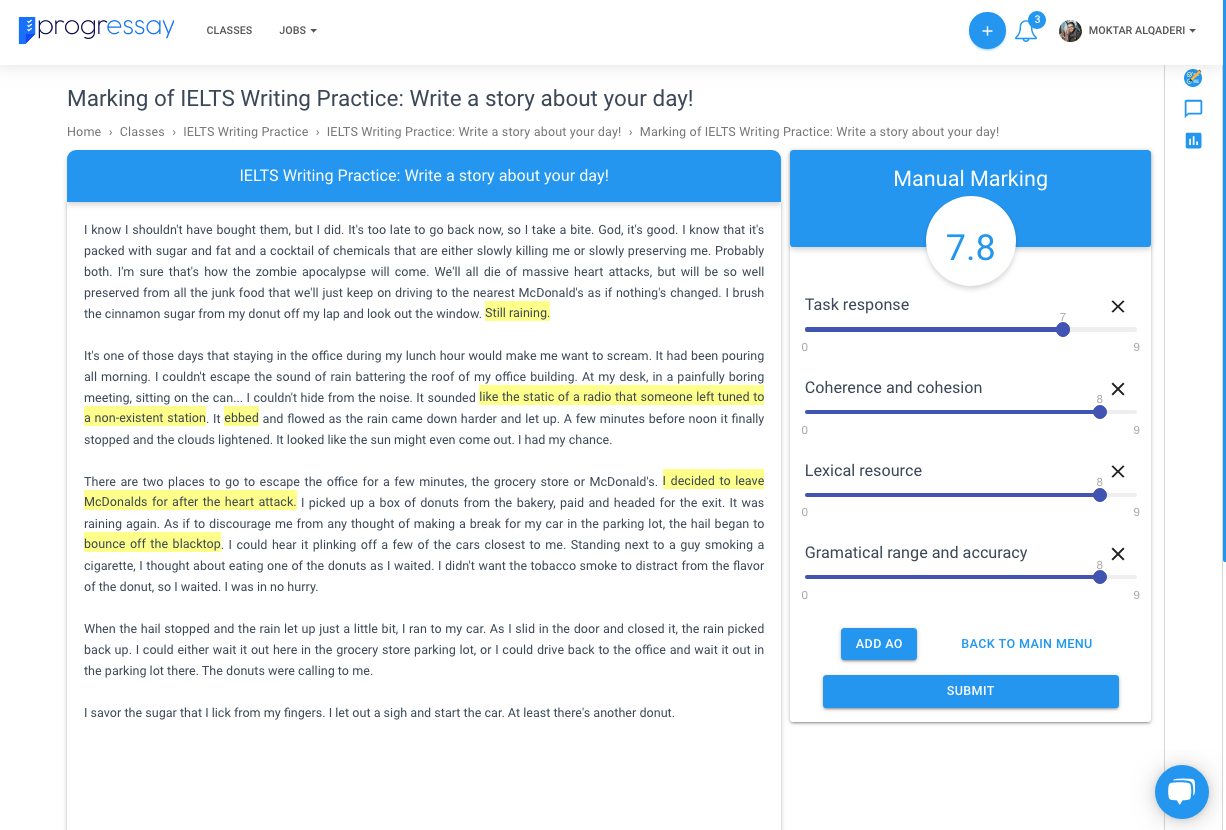

High-quality marking is not about how beautiful it appears, but rather how effective and reliable it is for the students! Progressay is an innovative, teacher-led marking platform, driven by AI, that seeks to enhance marking reliability and reduce marking workload. Progressay has developed an automated essay marking tool that allows teachers to mark in a fraction of the time, provide precise feedback and track progress.

Screenshot of Progressay marking tool:

Research has shown computer-based marking to be reliable. Meadows and Billington (2005) cite a study by Cohen, Ben-Simon and Hovav (2003), which “reported that the correlation between the number of characters keyed by the candidate, and the scores given by human markers are as high as the correlation between scores given by human markers.” The computer-based marking in Cohen, Ben-Simon and Hovav (2003) was rooted in the extraction of linguistic surface features such as the number of characters, the number of sentences, the number of low-frequency words, sentence length, etc.
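To give a feel for what ‘linguistic surface features’ means in practice, here is a minimal Python sketch that counts the kinds of features listed above. It is purely illustrative: it is not the method used by Cohen, Ben-Simon and Hovav (2003), nor a description of how Progressay works. The function name `surface_features` and the tiny `COMMON_WORDS` list are assumptions made for this example; a real system would use a proper word-frequency list and far more careful sentence splitting.

```python
import re

# Hypothetical stand-in for a real word-frequency list: any word that is
# NOT in this set is treated as "low-frequency" in this sketch.
COMMON_WORDS = {
    "the", "a", "an", "and", "of", "to", "in", "is", "it", "was",
    "he", "she", "that", "on", "for", "with", "as", "his", "her", "by",
}


def surface_features(essay, common_words=COMMON_WORDS):
    """Count simple surface features of an essay (illustrative only)."""
    # Split on runs of sentence-ending punctuation and drop empty pieces.
    sentences = [s for s in re.split(r"[.!?]+", essay) if s.strip()]
    # Treat runs of letters or apostrophes as words, lower-cased.
    words = re.findall(r"[a-z']+", essay.lower())
    low_frequency = [w for w in words if w not in common_words]
    return {
        "characters": len(essay),
        "sentences": len(sentences),
        "words": len(words),
        # Mean sentence length in words; 0.0 for an empty essay.
        "mean_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        "low_frequency_words": len(low_frequency),
    }
```

Calling `surface_features("The cat sat. It was ambivalent!")` returns a dictionary of counts for that text. Counts like these say nothing on their own about the quality of an argument, which is why they are described as surface features.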

At Progressay, our mission is to free teachers up from laborious and unreliable marking practices! If you are an educator and would like to see our automated marking tool for yourself, get in touch! To sign up for a free trial, leave your contact details below and a member of the team will reach out shortly.

[hubspot type=form portal=6137992 id=8516465c-aab2-4e2b-8144-37c5e2c50d8f]